Vinayak

Program Management & Operations | Indian Air Force | Synopsys | Wich Please

B. Tech ECE, IIIT - Hyderabad

PG D in Aeronautical Engineering, Air Force Technical College (VTU)

PROJECTS

Android Based Smart Wheel Chair (Prototype)

This project was selected for the World Finals of the World Embedded Software Contest, organized by the Korean Embedded Software Industry Council. It finished among the top 4 international teams and was the only team from India.

The main focus of this project was to assist people with physical disabilities who cannot move on their own.

The project aims to use the processing power that lies untapped in present-generation smartphones to help with the navigation and localization of automated vehicles. The navigation algorithm is implemented as an Android app that runs on a smartphone and navigates the wheelchair.

A microcontroller, paired with the phone via Bluetooth, serves as the processing unit of the control subsystem. Any Android smartphone with a decent camera that meets certain minimum specifications can be paired with the control subsystem. The Android app we developed can be installed on any such phone, and the phone is mounted on the wheelchair itself.

The app uses a navigation algorithm we developed, together with the phone's camera, to guide the control system. Assuming the current position is known, the destination is provided via SMS or by other means. A marker is placed on every door. Each marker is a unique pattern of black and white boxes that identifies a particular door, and it is recognized in the camera image using image processing tools. For a given pair of starting and destination positions, we can generate a unique sequence of door numbers that leads to the destination.
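The source does not say how the door sequence is generated. One plausible approach, sketched here under that assumption, treats the floor plan as a graph whose nodes are door numbers and runs a breadth-first search (BFS), which returns the route that passes through the fewest doors. The `DOOR_GRAPH` layout and the door numbers are hypothetical.

```python
from collections import deque

# Hypothetical floor map: each key is a door number (one marker per door)
# and each value lists the doors reachable directly from it.
DOOR_GRAPH = {
    101: [102],
    102: [101, 103, 201],
    103: [102, 104],
    104: [103],
    201: [102, 202],
    202: [201],
}


def door_sequence(graph, start, goal):
    """Return the shortest list of doors from start to goal using BFS, or None."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        door = queue.popleft()
        if door == goal:
            # Walk the parent links back to the start, then reverse the path.
            path = []
            while door is not None:
                path.append(door)
                door = parent[door]
            return path[::-1]
        for nxt in graph.get(door, []):
            if nxt not in parent:
                parent[nxt] = door
                queue.append(nxt)
    return None  # goal not reachable


print(door_sequence(DOOR_GRAPH, 101, 202))  # [101, 102, 201, 202]
```

As each marker is recognized, the app can check it against the next expected door in this list to confirm that the wheelchair is on route.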

By identifying the doors along the way, we can locate the wheelchair on the given map. Obstacles in the path are detected with sensors and avoided on the way to the destination. The microcontroller controls the wheelchair's motion through motors attached to both wheels, and the Android app controls the microcontroller over a wireless (Bluetooth) channel.

Technologies used: ARToolKit, Android SDK, ATMEL Microcontroller, Serial Bluetooth.

Period: September 2011 - November 2011

Work Flow:

The translation coordinates computed on the phone were sent to the microcontroller via Bluetooth, which then sent the corresponding signals to the motors.
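To illustrate this step, the sketch below converts a marker translation into left and right motor commands for a differential drive. It assumes `x_mm` is the marker's lateral offset and `z_mm` its forward distance in the camera frame. The gain, base speed, stop distance and the `L###R###` serial frame are all hypothetical, not the project's actual protocol.

```python
import math


def wheel_speeds(x_mm, z_mm, base=150, gain=200.0, stop_mm=500.0, max_pwm=255):
    """Turn a marker translation (camera frame) into (left, right) PWM values.

    Assumes x_mm is the lateral offset (positive = marker to the right) and
    z_mm the distance ahead of the camera. All constants are hypothetical.
    """
    if z_mm <= stop_mm:
        return 0, 0  # close enough to the marker (door): stop
    heading_error = math.atan2(x_mm, z_mm)  # radians, positive = marker to the right
    turn = gain * heading_error
    # Speeding up the left wheel turns a differential drive to the right.
    left = max(0, min(max_pwm, round(base + turn)))
    right = max(0, min(max_pwm, round(base - turn)))
    return left, right


def encode_command(left, right):
    """Hypothetical ASCII frame for the serial Bluetooth link."""
    return f"L{left:03d}R{right:03d}\n".encode("ascii")


print(encode_command(*wheel_speeds(200, 1000)))  # b'L189R111\n'
```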

*Please refer to the Research section for the complete wheelchair project.

AR Marker

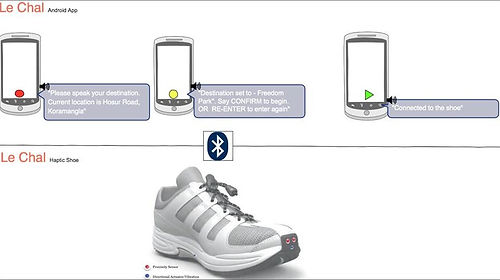

Le'Chal: Shoe for the visually impaired

This project was developed at the MIT Media Lab workshop held at the College of Engineering, Pune, India, in January 2011. It was named among India's Top Innovators Under 35 by Technology Review, the magazine published by the Massachusetts Institute of Technology (MIT).

The main motivation of the project was to build an assistive device that helps visually impaired people navigate easily and avoid obstacles. The shoes complement the cane. The innovation is that the user is guided by haptic actuators inside the shoe together with the phone's GPS, unlike many existing products, which guide the user through voice instructions.

The most difficult problems blind people usually face while navigating are orientation and direction, as well as obstacle detection.

For direction, the shoe signals on the foot toward which the user should turn. For orientation, the shoe keeps vibrating while the wearer is not facing along the initial path and stops once they are headed in the right direction. Because orientation is checked at regular intervals, this brings the wearer back on track.
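The direction and orientation logic can be sketched as follows. The code computes the great-circle bearing from the current GPS fix to the next waypoint, compares it with the wearer's heading, and decides which foot (if any) should vibrate. The 20-degree tolerance and the function names are assumptions for illustration, not the project's actual values.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing in degrees (0 = north, clockwise)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0


def haptic_cue(heading_deg, target_bearing_deg, tolerance_deg=20.0):
    """Return 'left', 'right', or None when the wearer is on track.

    The heading error is wrapped to [-180, 180); positive means the target
    lies clockwise (to the right) of the current heading.
    """
    error = (target_bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(error) <= tolerance_deg:
        return None  # correctly oriented: stop vibrating
    return "right" if error > 0 else "left"
```

Calling `haptic_cue` at a fixed interval with the latest heading mirrors the "check orientation at regular intervals" behaviour described above.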

The project consists of two major parts:

- Navigation along with orientation.

- Obstacle detection and avoidance.

Technologies used: Android SDK, Arduino Uno, sonar array, CMOS IR camera, serial Bluetooth, OpenCV, Google Maps API, pressure sensors, haptic actuators, laser plane projector.

The destination is entered in the Android app on the phone. The phone's GPS data is then sent via Bluetooth to the processing unit in the shoe, and the actuators guide the user accordingly.

Internal structure of the shoe with sensors and processing unit.

*Please refer to the Research section for the complete, detailed research on this project.

Video of the first prototype

Real-Time Vision-Based No-Ball Detector

This was the other project I did during my internship. In cricket, when a no-ball is bowled, the third umpire takes a considerable amount of time to check it. The idea was to develop an unobtrusive system that can tell the on-field umpire whether the delivery is fair or a no-ball, immediately after the bowler's foot lands at the popping crease.

Rule: A delivery is a no-ball (by overstepping) when the bowling foot lands ahead of the popping crease.

The problem is therefore divided into two parts:

- Detecting the position of the bowler's bowling foot.

- Detecting which of the bowler's feet is the bowling foot.

Technologies used: high-FPS IR-filtered camera, laser plane projector, OpenCV, PS3 Eye camera.

We modified a PS3 Eye camera with an IR filter to reduce the effect of varying sunlight and mounted it in a hole drilled into the middle stump. For testing, we used a patched PS3 Eye driver with the OpenCV library so the camera could run at 125 frames per second. A laser plane projection module was fixed at the bottom of the middle stump, just 1 cm above the ground. Using standard blob detection with some morphological filters, blobs were detected with very high precision. Since the distance of the crease from the stumps is fixed by ICC standards, we built a mathematical model based on the position of the PS3 Eye camera on the stump. We also calibrated the crease in the camera coordinate system, so that even if the stump is rotated or tilted by a few degrees, the measured distance of the foot blobs is automatically corrected for the camera's new position.
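Blob detection on a thresholded IR frame amounts to grouping bright pixels into connected components. The minimal pure-Python sketch below performs 4-connected labelling. The original used OpenCV; here a simple minimum-area threshold is used in place of the morphological filters. The `find_blobs` name and its output fields are illustrative.

```python
from collections import deque


def find_blobs(mask, min_area=3):
    """4-connected component labelling of a binary image (list of lists of 0/1).

    Returns one dict per blob with its pixel 'area' and 'centroid' (row, col).
    Components smaller than min_area are discarded as noise.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood-fill this component with a BFS over 4-neighbours.
            pixels, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                n = len(pixels)
                centroid = (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)
                blobs.append({"area": n, "centroid": centroid})
    return blobs
```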

Problem: How to determine which blob belongs to the bowling foot, and at what instant its distance should be recorded so that the error becomes zero.

We observed that when the ball is released from the bowler's hand, the bowling foot is near the crease, so that is the moment at which the distance should be recorded. We therefore used ball detection, which gave very accurate results with high-FPS cameras. We tried two methods for ball detection:

- A standard pipeline using graph cut, background subtraction, filters, Hough circle detection and a color filter.

- The Predator (OpenTLD) algorithm, which is based on machine learning.
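The timing idea above can be sketched as follows. The code takes the first frame in which the ball is detected as the release instant and records the foot distance in that frame. Two assumptions are made here: each frame already provides the distance (in mm from the stumps) of every foot blob, measured to the blob edge nearest the stumps; and the bowling foot is the blob farthest from the stumps at release. The popping crease lies 4 ft (1.22 m) in front of the stumps. The function names are hypothetical.

```python
CREASE_MM = 1220.0  # popping crease: 4 ft (1.22 m) in front of the stumps


def bowling_foot_distance(frames):
    """frames: time-ordered list of (ball_visible, foot_distances_mm).

    Returns the bowling-foot distance at the first frame in which the ball
    is visible (assumed to be the release instant), or None.
    """
    for ball_visible, feet in frames:
        if ball_visible and feet:
            # Assumption: the front (bowling) foot is the blob farthest from the stumps.
            return max(feet)
    return None


def is_no_ball(distance_mm, crease_mm=CREASE_MM):
    """No-ball if the foot's edge nearest the stumps is already past the crease."""
    return distance_mm is not None and distance_mm > crease_mm
```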

Testing our ball detection code with the PS3 Eye camera.

Ball detection using the standard OpenCV shape detection method.

Ball detection using the OpenTLD algorithm, based on machine learning.

Automatic Climate Control in a Green House

The motivations behind this project are as follows:

- Enable people to grow plants that require specific climatic conditions.

- Reduce the cost of vegetables, flowers and herbs that do not normally grow in arid and semi-arid regions.

- Reduce water wastage by supplying plants with only the optimum amount.

This project qualified among the top 15 teams for the final round of the TI Analog Design Contest 2011, organized by Texas Instruments India, and won a consolation prize.

The goal was to design a greenhouse that efficiently controls all the critical parameters, mainly temperature, soil humidity and air humidity, with minimal error. To keep costs down, we built our own systems, such as an air heater, a humidity generator, soil humidity sensors and an irrigation system.

- Temperature Module

- Air Humidity Module

- Soil Humidity Module
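The source does not describe the control law used in these modules. A common choice for heaters, humidifiers and irrigation pumps is on/off control with hysteresis, sketched below in Python for readability (the prototype ran on an MSP430). All set points are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HysteresisSwitch:
    """On/off controller with a dead band: on below `low`, off above `high`."""
    low: float
    high: float
    on: bool = False

    def update(self, reading: float) -> bool:
        if reading < self.low:
            self.on = True   # quantity too low: run the actuator
        elif reading > self.high:
            self.on = False  # quantity high enough: stop it
        # Inside the dead band the previous state is kept, avoiding relay chatter.
        return self.on


# Hypothetical set points, one controller per module.
controllers = {
    "heater": HysteresisSwitch(low=22.0, high=26.0),      # air temperature, deg C
    "humidifier": HysteresisSwitch(low=60.0, high=70.0),  # relative humidity, %
    "irrigation": HysteresisSwitch(low=30.0, high=40.0),  # soil moisture, %
}


def control_step(readings):
    """readings maps actuator name -> latest sensor value; returns on/off states."""
    return {name: ctl.update(readings[name]) for name, ctl in controllers.items()}
```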

Software implementation of the whole system:

Technologies used: TI MSP430 microcontroller, temperature sensors, motor drivers, air humidity sensor, square wave generator, etc.

Video of the prototype.

The solution is divided into three modules, each with its own block diagram.

Academic Projects

- Extended Kalman Filter Localization

Mobile robots are prone to errors in their motion, and these errors accumulate over time as the robot moves through its environment. A Kalman filter uses sensor measurements to correct the robot's perceived location, which drifts because of these motion errors. In EKF localization, the linear prediction and measurement models of the Kalman filter are replaced by nonlinear models that are linearized around the current estimate.

This project used a simulation platform called ARIA, with the AmigoBot as the mobile robot. After motion commands were given to the simulated robot, sonar readings were obtained at four time intervals. Since the obstacles were static, these readings were used to set up the extended Kalman filter, and the localization estimate after filtering was shown to be better than the estimate before it.
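A toy one-dimensional extended Kalman filter step is sketched below to show the predict, linearize and update cycle. It is not the ARIA/AmigoBot setup. The robot moves along a corridor, and it measures its range to a landmark that sits off the corridor, so the measurement model h(x) is nonlinear and is linearized through its Jacobian. The landmark position and the noise variances are hypothetical.

```python
import math


def ekf_step(x, P, u, z, q, r, landmark):
    """One predict/update cycle of a 1-D extended Kalman filter.

    x, P: position estimate (m) and its variance.
    u: commanded displacement; motion model x' = x + u with noise variance q.
    z: measured range to a landmark at (lx, ly) off the corridor;
       h(x) = sqrt((x - lx)^2 + ly^2), measurement noise variance r.
    """
    lx, ly = landmark
    # Predict.
    x_pred = x + u
    P_pred = P + q
    # Linearize the nonlinear measurement model around the prediction.
    h = math.hypot(x_pred - lx, ly)
    H = (x_pred - lx) / h  # Jacobian dh/dx
    # Update.
    S = H * P_pred * H + r  # innovation variance
    K = P_pred * H / S      # Kalman gain
    x_new = x_pred + K * (z - h)
    P_new = (1 - K * H) * P_pred
    return x_new, P_new
```

For example, starting from an estimate of 2.5 m with a 1 m command while the true position becomes 4.0 m, one step with an accurate range reading pulls the estimate from the predicted 3.5 m to within a few millimetres of 4.0 m and shrinks the variance.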

- Mapping

Given an unknown environment, a robot equipped with sonar range scanners was driven around the map, and its readings were used to update the probability of an obstacle being present in each cell of a discrete grid. Dispersion of the sonar beam was assumed, and the probabilities were updated accordingly. Frontiers were found from each vantage point, and to simplify the problem the robot was then teleported to each frontier.
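One standard way to maintain such a grid is the log-odds occupancy update, sketched below for the cells along a single sonar ray. Under this scheme, unknown cells start at probability 0.5. This is an assumed formulation rather than the project's exact model. The inverse sensor probabilities (0.7 and 0.3) are hypothetical, and the beam dispersion mentioned above (spreading the update over a cone) is left out for brevity.

```python
import math

L_OCC = math.log(0.7 / 0.3)   # hypothetical P(occupied | echo from this cell) = 0.7
L_FREE = math.log(0.3 / 0.7)  # hypothetical P(occupied | beam passed through) = 0.3


def update_beam(log_odds, cells_along_beam, hit_index):
    """Log-odds update for the grid cells traversed by one sonar ray.

    log_odds: dict (row, col) -> log-odds; missing cells are unknown (0.0, p = 0.5).
    cells_along_beam: cells ordered from the sensor outwards.
    hit_index: index of the cell that produced the echo, or None for a
    max-range reading. Cells beyond the hit are left unchanged.
    """
    for i, cell in enumerate(cells_along_beam):
        if hit_index is not None and i == hit_index:
            log_odds[cell] = log_odds.get(cell, 0.0) + L_OCC
            break
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    return log_odds


def probability(value):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(value))
```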

Different strategies for picking frontiers were used, including depth-first search and breadth-first search. Both strategies were compared on a standard set of maps by the percentage of territory explored.
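A frontier is commonly defined as a free cell next to unexplored space. The sketch below finds frontier cells and orders them by the sequence in which a BFS (queue) or a DFS (stack) over free cells reaches them from the robot's position. This is one plausible reading of the two strategies, not the project's code. The grid encoding (0 free, 1 occupied, -1 unknown) is an assumption.

```python
from collections import deque

FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def neighbours(grid, r, c):
    """Yield in-bounds 4-neighbours of (r, c)."""
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]):
            yield nr, nc


def is_frontier(grid, r, c):
    """A free cell with at least one unknown neighbour."""
    return grid[r][c] == FREE and any(grid[nr][nc] == UNKNOWN for nr, nc in neighbours(grid, r, c))


def frontier_order(grid, start, strategy="bfs"):
    """Frontier cells in the order a BFS (queue) or DFS (stack) over free cells visits them."""
    container = deque([start])
    visited, order = set(), []
    while container:
        cell = container.popleft() if strategy == "bfs" else container.pop()
        if cell in visited:
            continue
        visited.add(cell)
        if is_frontier(grid, *cell):
            order.append(cell)
        for n in neighbours(grid, *cell):
            if n not in visited and grid[n[0]][n[1]] == FREE:
                container.append(n)
    return order
```

The robot would then be teleported to each frontier in the returned order, as in the simplified setup above.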

- Image Search

The system performs an image search similar to Google image search: the user searches a database for similar images by providing a query image rather than a search string. We used the Caltech-101 dataset for training and testing the descriptors. The image search algorithm was implemented with two different methods.

- Method 1: Bag of Words (BoW) algorithm using SIFT and SURF descriptors with nearest-neighbour classification. Inspired by the natural language processing literature, this model discards all spatial information in an image. Instead, the image is treated as a set (i.e. bag) of features, which act as the words in our model.

- Method 2: Global features using PHoG and SIFT descriptors with SVM classification. This method computes the same kind of histograms as the Bag of Words algorithm but keeps spatial information: the image is represented at several levels, and histograms are computed at every level. Though computationally expensive, its accuracy is considerably higher.
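The core of Method 1 is sketched below. Each local descriptor is quantized to its nearest visual word, and the counts are accumulated into a normalized histogram with all spatial layout discarded. The query is then labelled by its nearest neighbour in histogram space. In practice the vocabulary would come from clustering SIFT/SURF descriptors (for example with k-means). Here the 2-D descriptors, the vocabulary and the class labels are toy, hypothetical values.

```python
import math


def quantize(descriptor, vocabulary):
    """Index of the nearest visual word (squared Euclidean distance)."""
    return min(range(len(vocabulary)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(descriptor, vocabulary[k])))


def bow_histogram(descriptors, vocabulary):
    """L1-normalised histogram of visual-word counts; spatial layout is discarded."""
    hist = [0.0] * len(vocabulary)
    for d in descriptors:
        hist[quantize(d, vocabulary)] += 1
    total = sum(hist) or 1.0
    return [h / total for h in hist]


def nearest_neighbour(query_hist, database):
    """database: list of (label, histogram); returns the label of the closest entry."""
    return min(database, key=lambda item: math.dist(query_hist, item[1]))[0]


# Toy example with a two-word vocabulary (hypothetical values).
vocab = [(0.0, 0.0), (10.0, 10.0)]
db = [("helicopter", bow_histogram([(0, 1), (1, 0), (9, 9)], vocab)),
      ("motorbike", bow_histogram([(10, 9), (9, 10), (1, 1)], vocab))]
print(nearest_neighbour(bow_histogram([(0, 0), (0.5, 0.5), (0.2, 0.1), (11, 11)], vocab), db))
```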

We tested a variety of features (SIFT, SURF, PHoG and GIST) to represent a training image adequately. Every descriptor has drawbacks: some are computationally expensive, while others are not accurate enough. PHoG was the best descriptor, with the highest average accuracy of 68%, and it is less computationally expensive than SIFT. The best-classified object was the helicopter, with 19 of 22 images classified correctly using PHoG.

Technologies used: OpenCV, Matlab

- Panorama Building / Image Stitching

The system uses computer vision algorithms to compute a mosaic from individual images of a larger scene. It finds common interest points across the images and stitches them together into a single panorama. The input is a set of N images to be stitched.

The first step is to convert each image to grayscale and build a feature vector space of 64-D (SURF) or 128-D (SIFT) descriptors.

The second step is to find common interest points among these feature vectors. These interest points are stable under local and global perturbations in the image domain. Such perturbations include deformations arising from perspective transformations (sometimes reduced to affine transformations, scale changes, rotations and/or translations) as well as illumination or brightness variations. A random selection of the common interest points is then made.

By comparing the feature vectors of the common interest points across images, we compute the homography between views. The sparse feature matches are selected with an approximate nearest neighbour criterion and filtered with a robust estimator such as RANSAC (RANdom SAmple Consensus).
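The robust estimation step can be sketched in pure Python. The code fits an exact homography to four random correspondences by solving the 8x8 linear system with h33 = 1, counts the matches whose reprojection error falls under a pixel threshold, and keeps the model with the most inliers. The iteration count and threshold are illustrative assumptions. A full implementation would also re-fit H on all inliers by least squares; OpenCV packages that step as `cv2.findHomography(src, dst, cv2.RANSAC, ransacReprojThreshold)`.

```python
import random


def solve(A, b):
    """Gaussian elimination with partial pivoting; returns x with A x = b."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate sample")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4(src, dst):
    """Exact homography (h33 = 1) from four point correspondences (DLT)."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = solve(A, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def project(H, p):
    """Apply H to point p (raises ZeroDivisionError if p maps to infinity)."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def ransac_homography(matches, iters=500, thresh=2.0, seed=0):
    """matches: list of ((x, y), (u, v)). Returns (best H, inlier indices)."""
    rng = random.Random(seed)
    best_H, best_inliers = None, []
    for _ in range(iters):
        sample = rng.sample(matches, 4)
        try:
            H = homography_from_4([m[0] for m in sample], [m[1] for m in sample])
        except ValueError:
            continue  # collinear or otherwise degenerate sample
        inliers = []
        for i, (p, q) in enumerate(matches):
            try:
                u, v = project(H, p)
            except ZeroDivisionError:
                continue
            du, dv = u - q[0], v - q[1]
            if du * du + dv * dv < thresh * thresh:
                inliers.append(i)
        if len(inliers) > len(best_inliers):
            best_H, best_inliers = H, inliers
    return best_H, best_inliers
```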

Using the homography matrix, the images are joined to create the panorama. We observed and compared the performance of both SIFT and SURF descriptors.

Sample Dataset

Intermediate Matching Process

Output: Using standard homographic technique

Output: Using improvised homographic technique

SIFT and SURF each have pros and cons. SIFT is slower but gives better results, whereas SURF is much faster but its results are weaker. When the image conditions are varied, SIFT outperforms SURF under scaling, rotation and blur, while SURF performs best under illumination changes.

Technologies used: OpenCV, Matlab

- Obstacle Avoidance in Dynamic Velocity Environments

Given obstacles with time-varying velocities, the challenge was to plan the motion of an omnidirectional robot so that it reaches its destination in minimum time while avoiding them. First, the obstacles are expanded by the size of the robot. Then, treating the robot as a point object, we use relative velocities to find a velocity cone that represents the collision danger zone. This is done for each obstacle, and the obstacles are avoided by choosing a velocity outside all the cones.

Whenever an obstacle changes velocity, an interrupt is raised and the above computation is repeated.
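A collision-cone (velocity obstacle) check and a greedy velocity choice are sketched below. A robot velocity is unsafe if the relative velocity points inside the cone of half-angle asin(R / d) around the line of sight to an obstacle of expanded radius R at distance d. Among sampled safe velocities, the sketch picks the one with the largest component toward the goal. The sampling density, the two speed levels and the greedy scoring are assumptions standing in for the minimum-time criterion.

```python
import math


def in_collision_cone(p, v, obs_pos, obs_vel, radius):
    """True if velocity v eventually collides with an obstacle moving at constant obs_vel.

    The robot is a point; radius is the obstacle radius already expanded by the robot size.
    """
    rx, ry = obs_pos[0] - p[0], obs_pos[1] - p[1]  # line of sight to the obstacle
    vx, vy = v[0] - obs_vel[0], v[1] - obs_vel[1]  # relative velocity
    dist = math.hypot(rx, ry)
    if dist <= radius:
        return True  # already overlapping
    speed = math.hypot(vx, vy)
    if speed == 0:
        return False  # no relative motion, the gap never closes
    cos_angle = (rx * vx + ry * vy) / (dist * speed)
    half_angle = math.asin(radius / dist)
    return cos_angle > math.cos(half_angle)  # relative velocity lies inside the cone


def choose_velocity(p, goal, obstacles, max_speed=1.0, n_headings=72):
    """obstacles: list of (position, velocity, expanded_radius). Returns a safe velocity or None."""
    gx, gy = goal[0] - p[0], goal[1] - p[1]
    gd = math.hypot(gx, gy) or 1.0
    candidates = [(0.0, 0.0)]
    for k in range(n_headings):
        a = 2 * math.pi * k / n_headings
        for s in (max_speed, 0.5 * max_speed):
            candidates.append((s * math.cos(a), s * math.sin(a)))
    safe = [v for v in candidates
            if not any(in_collision_cone(p, v, o_pos, o_vel, r) for o_pos, o_vel, r in obstacles)]
    if not safe:
        return None
    # Greedy choice: maximise progress toward the goal.
    return max(safe, key=lambda v: (v[0] * gx + v[1] * gy) / gd)
```

Following the interrupt scheme described above, `choose_velocity` would simply be called again whenever an obstacle's velocity changes.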

Technologies used: OpenCV

- Audio communication using LASER

Audio was transmitted over a laser link based on amplification of the audio signal, with filtering circuits used to remove noise. If the laser source drifted out of alignment, it was realigned automatically by a servo motor interfaced to a microcontroller.

Technologies used: Atmel microcontroller, LASER module, servo motors.

- Intelligent Visitor Counter and Room Light Controller

The aim of this project is to accurately measure the number of people currently inside a room by counting the people entering and leaving through its doors. We demonstrated the project by installing the device on the two doors of a room. When someone went in through the entry door, the counter was incremented by one, and when someone left through the exit door, it was decremented by one. We also demonstrated that the room's power consumption can be controlled by adjusting the lighting in proportion to the number of people inside.
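The counting and lighting logic is sketched below, assuming that each beam-break at the entry door and at the exit door arrives as a separate event. The `full_at` parameter (the occupancy at which lighting reaches full power) and the clamp at zero for a missed entry event are hypothetical additions.

```python
class RoomOccupancy:
    """Counts people via entry/exit door events and sets lighting proportionally."""

    def __init__(self, full_at=10):
        self.count = 0
        self.full_at = full_at  # hypothetical: occupancy that needs full lighting

    def on_entry(self):
        self.count += 1

    def on_exit(self):
        self.count = max(0, self.count - 1)  # guard against a missed entry event

    def light_level(self):
        """Fraction of full lighting power, from 0.0 (empty room) to 1.0."""
        return min(1.0, self.count / self.full_at)
```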

Motivation: A lot of power is wasted when lights and cooling systems are left on in an empty room. To avoid this, we built a system that counts the number of people inside the room. The idea can be extended to airport terminals or railway stations to increase or decrease power consumption as needed.

Technologies used: IR sensors, TSOP receivers, Atmel microcontroller.